How AI can actually accelerate compliance efforts in financial services

Artificial Intelligence can empower financial services companies to more effectively comply with changing regulations

Governance, risk management, and compliance (GRC) are some of the most complex, stress-inducing and often misunderstood aspects of the financial services industry. Regulations are always evolving, as are the processes and systems that companies use to do business and manage GRC requirements.

Emerging technologies like Artificial Intelligence (AI) are proving helpful in combating this growing complexity and bolstering compliance efforts. AI shows promise in managing complicated and changing requirements, automating repetitive tasks, and giving human representatives more time to focus on strategic compliance efforts.

| Expert insight | “Amidst expanding regulatory requirements, compliance functions are under tremendous pressure to adapt to the risks of today’s ever more interconnected and digitised landscape at speed and scale. Financial services firms are showing a greater willingness than ever before to invest in regulatory risk and compliance programs.” Frank Ewing, CEO AML Rightsource |

| By the numbers | - 76% of financial services firms have seen increased compliance expenditure over the past year - 39% of financial services business and IT leaders expect IT budgets to grow in 2024 due to regulatory issues or concerns* - Financial institutions globally are spending $206.1 billion on compliance - Organisations that used security AI and automation extensively reported an average of $1.76 million lower data breach costs per company compared to those that didn’t use AI |

* Data source: Financial Services Business Priority Tracker 4Q23. © 2024 Gartner, Inc. and/or its affiliates. All rights reserved. 807706_C. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

The growing importance of regulatory compliance technology

Due to increased regulatory requirements and heightened attention to compliance, many financial services companies are increasing their focus on, and investment in, ensuring that they’re compliant. Estimates show that financial institutions will increase their spending on regulatory technology (RegTech) investments by approximately 124% between 2023 and 2028.

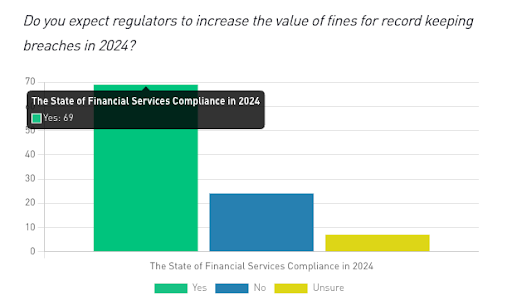

Driving this additional investment is the increase in fines for noncompliance with record-keeping and other regulations. In a survey, 69% of financial services executives reported that they expect regulators to increase the value of fines for record-keeping breaches.

In February 2024 alone, the SEC imposed $81 million in fines for record-keeping infractions. Globally, fines have been levied on companies that failed to record or retain electronic communications. Some of the largest institutions, including Wells Fargo, JP Morgan, Goldman Sachs, Morgan Stanley and Citigroup, have been punished with fines in the hundreds of millions of dollars, with total penalties exceeding $2 billion.

Factors contributing to the increasing number of regulations and strict enforcement include global geopolitical conflicts and changes, technological advancements in financial industry systems, and the use of artificial intelligence in financial crime.

Compliance priorities for financial services companies

Changing circumstances in global markets, developments in technology, and organisation-specific strategies can affect which of the many areas of compliance pose the greatest risk, and therefore require the greatest attention from financial services company leaders.

Some of the areas that many organisations are currently focusing on are Artificial Intelligence (AI), operational resilience, compliance risk assessment, compliance monitoring, and environmental, social and governance (ESG) issues.

How RegTech supports compliance

Compliance and governance are typically already part of most digital systems, and there are a number of different procedures, checks, and monitoring capabilities that assist compliance efforts. These include regulatory compliance checks such as Anti-Money Laundering (AML), Know Your Customer (KYC), General Data Protection Regulation (GDPR), and others. Data encryption and security measures are required to keep customer data secure, and records of all user activities and transactions (audit trails) are required in order to show that organisations are transparent and accountable.
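To make the audit-trail idea more concrete, here is a minimal Python sketch of a tamper-evident activity log. It assumes a simple hash-chained design, which is one common approach and not necessarily how any particular system works. The names `AuditTrail`, `record` and `verify` are hypothetical and chosen only for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash an entry together with the hash of the entry before it."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    """Hypothetical append-only log in which each entry is chained to the previous one."""

    entries: list = field(default_factory=list)

    def record(self, user: str, action: str, detail: str) -> None:
        """Append one user activity, linking it to the previous entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        payload = {"user": user, "action": action, "detail": detail}
        self.entries.append({"payload": payload, "hash": _digest(prev_hash, payload)})

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = ""
        for entry in self.entries:
            if entry["hash"] != _digest(prev_hash, entry["payload"]):
                return False
            prev_hash = entry["hash"]
        return True
```

In this sketch, calling `verify()` after changing any stored entry returns `False`, which is the property an auditor would rely on. It does not detect removal of the most recent entries; a production system would typically also need secure storage, timestamps and access controls.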

Beyond the digital applications and modules that support compliance, there are also important actions that employees and staff are required to undertake. These include participation in compliance training and awareness programs, and conducting regular audits and assessments. Well-designed systems and thorough, informed oversight by human auditors can keep organisations from falling out of compliance, but the increasing complexity of regulatory requirements makes it more challenging to keep up with all relevant regulations.

The present and future of AI-powered GRC

Generative AI (GenAI) is proving to be a valuable asset in the area of regulatory compliance. When trained with information about regulations and policies, it can serve as a virtual “expert”, helping to assess compliance by comparing required policies and regulations with company policies, and answering user questions about regulations. Developers are also using it as a code accelerator, checking code for any misalignment with policy. When it detects possible breaches, it can alert the necessary people and describe the situation clearly.

Additionally, AI can be very good at detecting and mitigating cyberfraud. When trained on large volumes of data showing what legitimate and fraudulent transactions look like, AI systems can monitor activity, then identify and block potentially fraudulent transactions. They can also communicate with customers to ask for more information or confirm details, which minimises the threat from identity theft, phishing attacks, credit card theft, and document forgery.
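As a rough illustration of learning from labelled examples, the Python sketch below trains a very simple nearest-centroid classifier on a handful of made-up transactions and then decides whether to allow or block a new one. Everything here is an assumption for illustration: the `HISTORY` data, the two features (amount and hour of day), the `SCALE` values and the function names. Real fraud systems use far richer data and models.

```python
from statistics import mean

# Hypothetical labelled history: (amount in EUR, hour of day, is_fraud)
HISTORY = [
    (25.0, 12, False),
    (40.0, 18, False),
    (12.5, 9, False),
    (980.0, 3, True),
    (1500.0, 2, True),
    (1200.0, 4, True),
]

# Assumed scales so that amount and hour contribute comparably to distance.
SCALE = (1000.0, 24.0)


def centroid(rows):
    """Average each feature across the given rows."""
    return tuple(mean(column) for column in zip(*rows))


def train(history):
    """Learn one 'typical' point for legitimate and one for fraudulent activity."""
    legit = [(amount, hour) for amount, hour, is_fraud in history if not is_fraud]
    fraud = [(amount, hour) for amount, hour, is_fraud in history if is_fraud]
    return {"legit": centroid(legit), "fraud": centroid(fraud)}


def distance(point, centre):
    """Scaled Euclidean distance between a transaction and a centroid."""
    return sum(((p - c) / s) ** 2 for p, c, s in zip(point, centre, SCALE)) ** 0.5


def decide(model, amount, hour):
    """Block a transaction that looks more like past fraud than past legitimate use."""
    point = (amount, hour)
    if distance(point, model["fraud"]) < distance(point, model["legit"]):
        return "block"
    return "allow"


model = train(HISTORY)
print(decide(model, 30.0, 14))    # small daytime payment
print(decide(model, 1100.0, 3))   # large payment in the early hours
```

With this toy data, the small daytime payment is allowed and the large early-morning payment is blocked. In practice a blocked transaction would usually trigger the customer confirmation step described above instead of being rejected outright.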

Task automation is another area where AI excels. It speeds up completion of repetitive processes like document verification, data analysis, transaction monitoring and more, and reduces mistakes caused by human error.

| Nearform expert insight | "Maintaining AI policies can be tedious, but leveraging AI removes human drudgery from the process. By continuously updating, indexing, and integrating best practices and regulations, AI eases the burden of policy maintenance. AI-driven policy experts also enhance accessibility and compliance, making policies not only relevant but also user-friendly and effective." Joe Szodfridt, Senior Solutions Principal, Nearform |

The flip side of AI: Compliance concerns

The promise of AI to help support compliance is counterbalanced by concerns about vulnerabilities that it may be used to exploit. AI has the potential to cause harm by facilitating cybercrimes, impersonating humans, and presenting incorrect information as correct. Concerns such as these are driving many governments around the world to consider instituting regulations to govern it. These regulations and national policies are evolving as quickly as the technology itself, so AI-specific updates to existing rules and new additions will soon be in effect.

Adding to the complexity for international organisations, regulations will differ by country and, sometimes, even within countries or regions.

For example, while AI policy is still in development in the US, indications are that different agencies will have the ability to create their own rules. Having several sets of rules to follow from different agencies or even from different states will add levels of complexity to compliance efforts.

The UK is seeking to be pro-innovation, encouraging AI development and setting broad policy guidelines while emphasising five cross-sectoral principles for existing regulators to interpret and apply. Those principles are: safety, security and robustness; appropriate transparency and explainability; fairness; accountability and governance; and contestability and redress. Since regulators are still in the process of creating a cohesive policy, there’s no specific timeline for when regulations will be released.

While AI policy is still under development in these countries and others like China, Australia, and Brazil, the EU has produced the world’s first comprehensive law governing AI, which will come into effect in 2024. This law will ban some uses of AI, require companies to be transparent about how they develop and train their models, and hold them accountable for any harm. It will also require AI-generated content to be labelled, and create a system for citizens to lodge complaints if they believe they have been harmed by an AI system.

Beyond regulatory compliance, AI applications for financial services companies should focus on broader risk management

First and foremost, responsible AI development means ensuring that people are guiding and reviewing the results of AI models. There’s no substitute for human verification that models are responding accurately and giving correct results, and that the underlying data is correct and unbiased.

In addition to keeping humans in the loop, McKinsey outlines some steps that financial organisations should take to manage the risks of GenAI:

- Ensure that everyone across the organisation is aware of the risks inherent in GenAI, publishing dos and don’ts and setting risk guardrails.
- Update model identification criteria and model risk policy (in line with regulations such as the EU AI Act) to enable the identification and classification of GenAI models, and have an appropriate risk assessment and control framework in place.
- Develop GenAI risk and compliance experts who can work directly with frontline development teams on new products and customer journeys.
- Revisit existing know-your-customer, anti-money laundering, fraud, and cyber controls to ensure that they are still effective in a GenAI-enabled world.

Preparing for the coming era of AI-enabled compliance

Despite valid concerns, Artificial Intelligence can empower financial services companies to more effectively comply with changing regulations. The concerns should not be ignored, but with responsible and transparent development, they can be mitigated.

As AI continues to grow in importance, overly cautious adoption may lead to competitive disadvantages, while overly rapid implementation could introduce serious compliance risks. Partnering with an experienced AI and data consultancy such as Nearform can ensure that organisations take a balanced approach that is tailored to their needs.

With a proven track record of working with major financial services organisations and global enterprises, Nearform helps unlock the value of AI safely and strategically, positioning companies for future success in a rapidly evolving landscape.
